NVIDIA will showcase advances in rendering, simulation, and generative AI at SIGGRAPH 2024, the leading computer graphics conference, which runs July 28 – August 1 in Denver. The company is presenting more than 20 research papers there, with innovations aimed at synthetic data generation and inverse rendering tools, both of which are essential for training future models.

Pioneering Research in Synthetic Data and Rendering

NVIDIA’s research efforts are making strides in enhancing simulation quality by improving image resolution and discovering new methods to create 3D representations of real or imagined worlds. These advancements are critical for various applications, including visual storytelling, scientific research, and the simulation-based training of robots and autonomous vehicles. The research papers cover a broad spectrum of topics, from diffusion models for visual generative AI to physics-based simulation and hyper-realistic rendering powered by AI.

Enhancing Visual Generative AI with Diffusion Models

Diffusion models are a transformative tool in visual generative AI, enabling artists and designers to quickly convert text prompts into images. This technology significantly reduces the time required to visualize ideas, making it invaluable for creative industries.

One notable paper, "ConsiStory," is a collaboration between NVIDIA and Tel Aviv University. It introduces a method called subject-driven shared attention, which cuts the time needed to generate a set of consistent images from 13 minutes to just 30 seconds. This is particularly useful for applications like comic strip illustration or storyboard development, where maintaining character consistency is crucial.

Another significant contribution is a paper on using 2D generative diffusion models for interactive texture painting on 3D meshes. It lets artists paint in real time with complex textures derived from any reference image, enabling more intricate and detailed artwork.

Breakthroughs in Physics-Based Simulation

Physics-based simulation is a key area where NVIDIA is making significant progress. This technology aims to bridge the gap between physical objects and their digital counterparts, making virtual representations move and behave as they would in the real world.

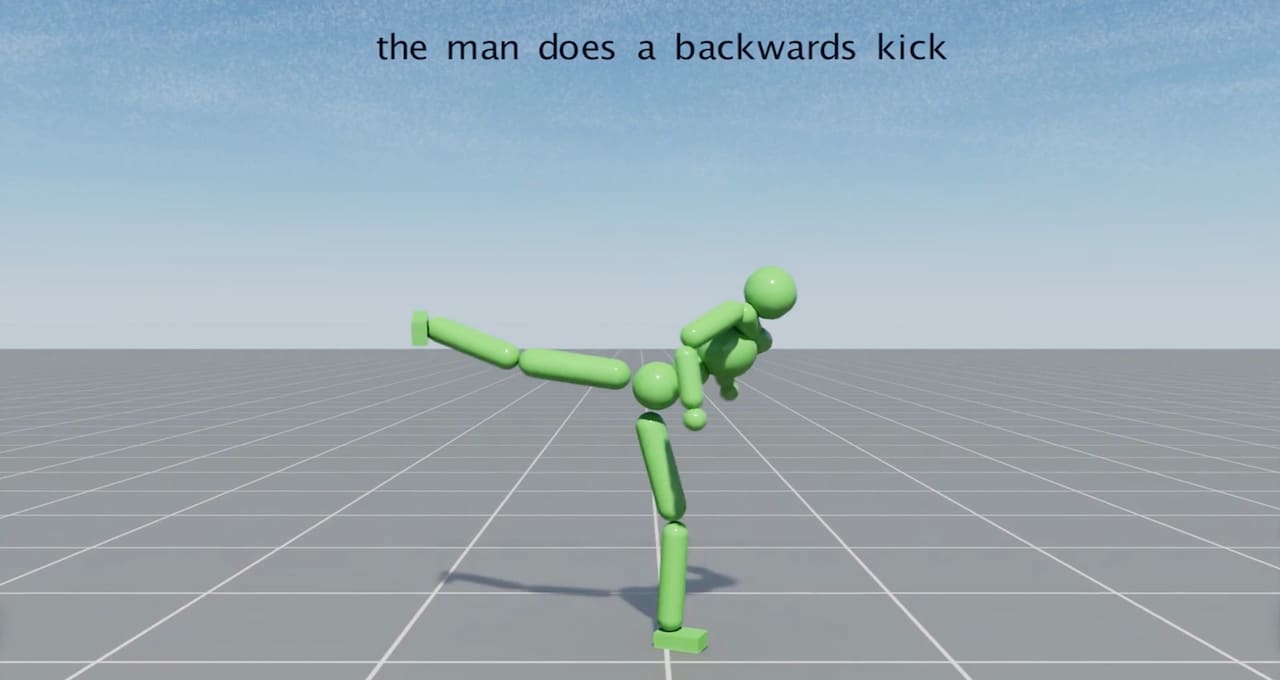

SuperPADL, a project developed by NVIDIA researchers, addresses the challenge of simulating complex human motions based on text prompts. By combining reinforcement learning and supervised learning, SuperPADL can replicate the motion of over 5,000 skills, and it runs in real time on a consumer-grade NVIDIA GPU.

Another noteworthy paper discusses a neural physics method that applies AI to understand how objects behave in different environments. This method is versatile, applicable to 3D meshes, neural radiance fields (NeRFs), or solid objects generated by text-to-3D models.

In collaboration with Carnegie Mellon University, NVIDIA researchers have developed a new type of renderer that can perform thermal analysis, electrostatics, and fluid mechanics. This method, recognized as one of the top five papers at SIGGRAPH, is highly parallelizable and eliminates the need for cumbersome model cleanup, thereby speeding up engineering design processes.

Advancing Rendering Realism and Diffraction Simulation

NVIDIA is also setting new standards in rendering realism and diffraction simulation. One paper, co-authored with the University of Waterloo, addresses free-space diffraction, an optical phenomenon in which light spreads out or bends around the edges of objects. The method integrates with path-tracing workflows to simulate diffraction in complex scenes up to 1,000 times faster. It applies not only to visible light but also to longer wavelengths such as radar, sound, or radio waves, making it highly versatile.

Path tracing, a technique that samples numerous light paths to create photorealistic images, is another area where NVIDIA is making significant improvements. Two papers presented at SIGGRAPH introduce methods to enhance sampling quality for ReSTIR, a path-tracing algorithm developed by NVIDIA and Dartmouth College. One paper, in collaboration with the University of Utah, presents a way to reuse calculated paths, increasing the effective sample count by up to 25 times and significantly boosting image quality. The other improves sample quality by randomly mutating a subset of the light’s path, which helps denoising algorithms and reduces visual artifacts in the final render.
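
To make the idea of reusing samples more concrete, here is a minimal Python sketch of weighted reservoir sampling, the general resampling idea that ReSTIR-style methods build on. It is not the method of either paper: the names `Reservoir`, `fill`, `merge`, and `target` are hypothetical, and the sketch assumes candidates are drawn uniformly and that every pixel shares the same target function. It only illustrates how merging two pixels' reservoirs yields one sample that stands in for all the candidates both pixels examined.

```python
import random


class Reservoir:
    """Keeps one selected candidate plus the bookkeeping needed to merge later."""

    def __init__(self):
        self.sample = None  # currently selected candidate
        self.w_sum = 0.0    # running sum of resampling weights
        self.count = 0      # how many candidates this reservoir represents

    def update(self, candidate, weight, rng):
        # Replace the kept sample with probability weight / w_sum.
        self.w_sum += weight
        self.count += 1
        if weight > 0.0 and rng.random() * self.w_sum < weight:
            self.sample = candidate


def fill(candidates, target, rng):
    """Stream uniformly drawn candidates through a fresh reservoir."""
    r = Reservoir()
    for x in candidates:
        # With uniform candidates, the weight is proportional to target(x).
        r.update(x, target(x), rng)
    return r


def merge(reservoirs, rng):
    """Combine reservoirs that share one target function (an assumption here)."""
    out = Reservoir()
    total = 0
    for r in reservoirs:
        if r.sample is not None:
            # The survivor of r carries the combined weight of everything r saw.
            out.update(r.sample, r.w_sum, rng)
        total += r.count
    out.count = total  # the merged sample represents every original candidate
    return out


rng = random.Random(0)
target = lambda x: x  # hypothetical stand-in for a path's contribution

pixel_a = fill([rng.random() for _ in range(8)], target, rng)
pixel_b = fill([rng.random() for _ in range(8)], target, rng)
combined = merge([pixel_a, pixel_b], rng)
print(combined.count)  # 16: two pixels' worth of candidates behind one sample
```

Each pixel generated only 8 candidates, yet the merged reservoir represents 16. Repeating such merges across neighboring pixels and frames is how reuse raises the effective sample count without tracing proportionally more paths.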

Teaching AI to Think in 3D

In addition to advancements in rendering and simulation, NVIDIA is pioneering multipurpose AI tools for 3D representations and design. One paper introduces fVDB, a GPU-optimized framework for 3D deep learning that matches the scale of the real world. fVDB provides the necessary AI infrastructure for large-scale 3D models and NeRFs, as well as for the segmentation and reconstruction of extensive point clouds.

Another significant contribution is a Best Technical Paper award winner, developed in collaboration with Dartmouth College, which introduces a unified theory for representing how 3D objects interact with light. This theory consolidates a diverse range of appearances into a single model, offering a comprehensive approach to light transport and interaction.

A collaboration with the University of Tokyo, the University of Toronto, and Adobe Research has resulted in an algorithm that generates smooth, space-filling curves on 3D meshes in real time. A task that previously took hours now runs in seconds with this framework, which also offers users extensive control over the output, enabling interactive design.

NVIDIA at SIGGRAPH 2024

NVIDIA’s presence at SIGGRAPH 2024 will include special events such as a fireside chat between NVIDIA founder and CEO Jensen Huang and Lauren Goode, a senior writer at WIRED, discussing the impact of robotics and AI on industrial digitalization. Additionally, NVIDIA researchers will present "OpenUSD Day by NVIDIA," a full-day event showcasing how developers and industry leaders are adopting and evolving OpenUSD to build AI-enabled 3D pipelines.

NVIDIA Research, which comprises hundreds of scientists and engineers worldwide, continues to focus on AI, computer graphics, computer vision, self-driving cars, and robotics. Its latest work can be accessed through its publications, providing a glimpse into the future of technology and its applications.

For those interested in learning more about NVIDIA’s contributions and innovations, further details can be found on the company's official page dedicated to SIGGRAPH 2024.

In conclusion, NVIDIA’s participation in SIGGRAPH 2024 underscores its commitment to pushing the boundaries of technology in rendering, simulation, and generative AI. Its research not only enhances the capabilities of current technologies but also paves the way for future innovations that will transform various industries.
