DeepSeek-R1: Advancing AI Reasoning with Cutting-Edge Technology

In the rapidly evolving world of artificial intelligence, improvements in reasoning are crucial for building more capable and autonomous systems. One such advancement is DeepSeek-R1, a model with state-of-the-art reasoning capabilities. Rather than answering directly, DeepSeek-R1 performs multiple inference passes over a query, using chain-of-thought, consensus, and search methods to arrive at the most accurate answer.

Understanding Test-Time Scaling with DeepSeek-R1

Test-time scaling is central to how DeepSeek-R1 operates. The model uses reasoning to iteratively "think" through a problem, which produces more output tokens and longer generation cycles, and response quality continues to improve as that budget grows. The cost is compute: delivering higher-quality answers while still responding in real time requires significant test-time computation, which is why accelerated computing matters for agentic AI inference.
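One of the methods named earlier, consensus, can be illustrated with a small sketch. The code below is not DeepSeek-R1's implementation: `noisy_solver` is a hypothetical stand-in for a single sampled reasoning pass, and `consensus_answer` simply takes a majority vote over several passes. It shows why spending more inference passes can raise answer quality.

```python
import random
from collections import Counter
from typing import Callable


def noisy_solver(question: str, rng: random.Random) -> str:
    """Hypothetical stand-in for one sampled reasoning pass.

    Returns the correct answer ("42") about 60% of the time and a
    random single-digit wrong answer otherwise.
    """
    if rng.random() < 0.6:
        return "42"
    return str(rng.randint(0, 9))


def consensus_answer(
    question: str,
    sample: Callable[[str, random.Random], str],
    passes: int,
    rng: random.Random,
) -> str:
    """Run `passes` independent samples and return the most common answer."""
    answers = [sample(question, rng) for _ in range(passes)]
    return Counter(answers).most_common(1)[0][0]


if __name__ == "__main__":
    rng = random.Random(0)
    # More passes cost more compute but make the majority vote more reliable.
    for passes in (1, 5, 25, 101):
        print(passes, consensus_answer("toy question", noisy_solver, passes, rng))
```

A single pass is wrong about 40% of the time in this toy setup, while the majority over many passes almost always lands on the right answer, at the price of proportionally more generated tokens.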

The Architecture and Capabilities of DeepSeek-R1

DeepSeek-R1 is a large mixture-of-experts (MoE) model with 671 billion parameters, ten times more than many other popular open-source language models. It supports an input context length of 128,000 tokens. Each layer of the model incorporates 256 experts, and each input token is routed to eight of those experts, which evaluate it in parallel.
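The routing described above can be sketched as generic top-k gating. This is a simplified illustration, not DeepSeek-R1's actual router: the random logits, the softmax over the selected experts, and the name `route_token` are assumptions made for the example. Only the counts (256 experts, eight per token) come from the text.

```python
import math
import random
from typing import List, Tuple

NUM_EXPERTS = 256  # experts per layer (from the article)
TOP_K = 8          # experts that evaluate each token (from the article)


def route_token(router_logits: List[float], k: int = TOP_K) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and normalize their weights.

    Returns (expert_index, weight) pairs whose weights sum to 1.
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


# Illustrative router scores for one token; a real router computes these
# from the token's hidden state.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route_token(logits)
active_fraction = TOP_K / NUM_EXPERTS  # 8 / 256 = 0.03125

if __name__ == "__main__":
    for expert, weight in selected:
        print(f"expert {expert:3d}  weight {weight:.3f}")
    print(f"experts active per token: {active_fraction:.2%}")
```

With these counts, each token touches only about 3% of the experts in a layer, which is why tokens must be moved quickly between the GPUs that host different experts.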

To deliver real-time responses, DeepSeek-R1 requires many graphics processing units (GPUs) with high computational performance, connected by high-bandwidth, low-latency links so that prompt tokens can be routed efficiently to all experts for inference. With the NVIDIA NIM microservice and its software optimizations, a single server equipped with eight H200 GPUs can run the full 671-billion-parameter DeepSeek-R1 model at up to 3,872 tokens per second. This throughput is achieved using the NVIDIA Hopper architecture’s FP8 Transformer Engine at every layer, along with 900 GB/s of NVLink bandwidth for communication among the model’s experts.
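A quick back-of-the-envelope check shows why an eight-GPU server is a plausible fit. The sketch below assumes every weight is stored in FP8 (one byte per parameter) and uses the H200's 141 GB of memory per GPU, a figure that does not appear in this article. It ignores the KV cache, activations, and runtime overhead, so it is a rough estimate only.

```python
PARAMS = 671e9             # model parameters (from the article)
BYTES_PER_PARAM_FP8 = 1    # assumption: all weights held in FP8
GPUS = 8                   # GPUs in the server (from the article)
H200_MEMORY_GB = 141       # per-GPU memory; not stated in the article
THROUGHPUT_TOK_S = 3872    # peak server throughput (from the article)

weights_gb = PARAMS * BYTES_PER_PARAM_FP8 / 1e9    # 671.0 GB of weights
total_memory_gb = GPUS * H200_MEMORY_GB            # 1128 GB across the server
headroom_gb = total_memory_gb - weights_gb         # 457.0 GB left for everything else
tokens_per_gpu = THROUGHPUT_TOK_S / GPUS           # 484.0 tokens per second per GPU

if __name__ == "__main__":
    print(f"weights:        {weights_gb:.0f} GB")
    print(f"server memory:  {total_memory_gb} GB")
    print(f"headroom:       {headroom_gb:.0f} GB")
    print(f"per-GPU output: {tokens_per_gpu:.0f} tokens/s")
```

Under these assumptions the weights occupy a little under 60% of the server's combined GPU memory, and the quoted peak works out to 484 tokens per second per GPU.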

The Role of Advanced Architectures in Enhancing AI

As demand for more sophisticated AI systems grows, extracting every floating-point operation per second (FLOPS) of performance from a GPU becomes critical for real-time inference. The next-generation NVIDIA Blackwell architecture promises to further enhance test-time scaling for reasoning models like DeepSeek-R1, with fifth-generation Tensor Cores capable of delivering up to 20 petaflops of peak FP4 compute performance and a 72-GPU NVLink domain optimized specifically for inference.

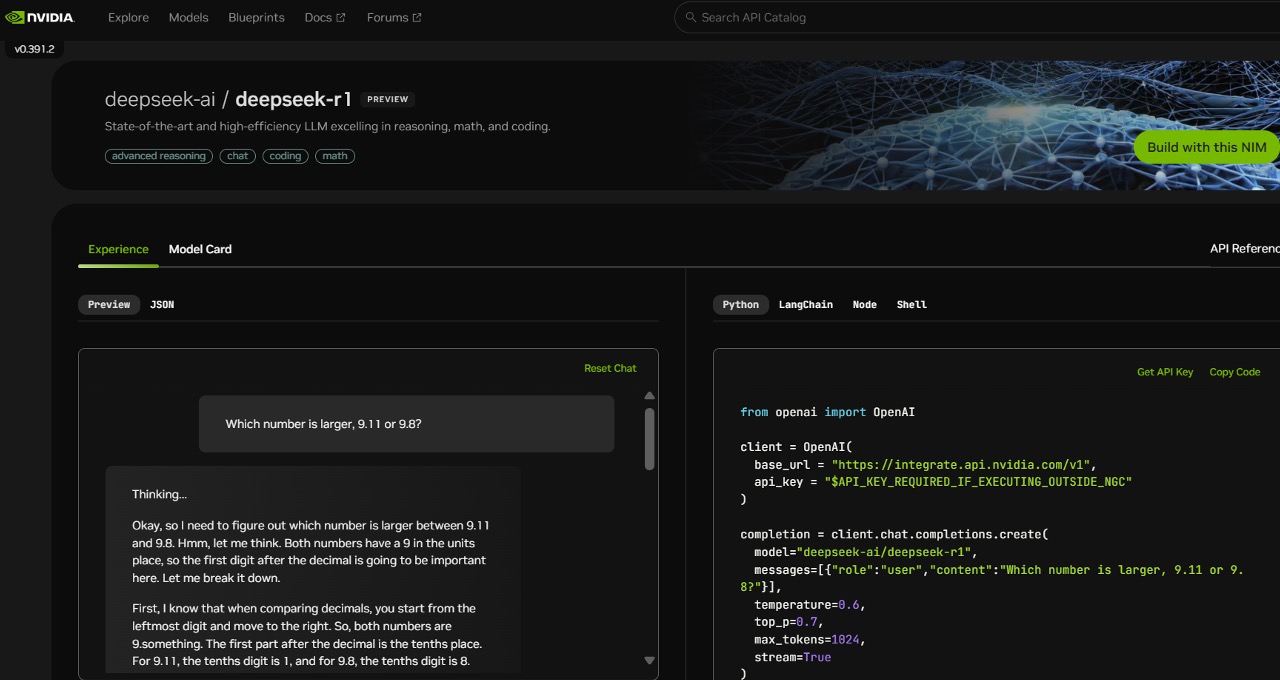

Accessing and Utilizing the DeepSeek-R1 NIM Microservice

For developers eager to explore the capabilities of DeepSeek-R1, the model is now available as an NVIDIA NIM microservice preview on the NVIDIA Build platform. This microservice allows developers to experiment with the application programming interface (API) and is expected to be available soon as part of the NVIDIA AI Enterprise software platform. By offering support for industry-standard APIs, the DeepSeek-R1 NIM microservice simplifies deployments, enabling enterprises to maximize security and data privacy by running the service on their preferred accelerated computing infrastructure.
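As a rough illustration of what "industry-standard APIs" can look like in practice, the sketch below builds an OpenAI-style chat completion request using only the Python standard library. The endpoint URL, the model identifier, and the response layout are assumptions that should be checked against the model's page on the NVIDIA Build platform. `build_chat_request` and `send` are hypothetical helper names, not part of any NVIDIA SDK.

```python
import json
import urllib.request

# Assumptions: verify both values against the NVIDIA Build model page.
API_URL = "https://integrate.api.nvidia.com/v1/chat/completions"
MODEL = "deepseek-ai/deepseek-r1"


def build_chat_request(prompt: str, api_key: str,
                       max_tokens: int = 4096) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        # Reasoning models emit long outputs, so leave room for the "thinking".
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's text.

    Assumes an OpenAI-compatible response body.
    """
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

To try it, create an API key on the platform and call `send(build_chat_request("Which is larger, 9.11 or 9.8?", api_key))`. Because the request shape is the common chat-completions format, the same code should work against a self-hosted deployment by changing `API_URL`.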

Moreover, using NVIDIA AI Foundry in conjunction with NVIDIA NeMo software, enterprises can create customized DeepSeek-R1 NIM microservices tailored to specialized AI agents. This flexibility empowers organizations to develop AI solutions that precisely meet their unique requirements.

A Glimpse into the Future of AI Reasoning

The introduction of DeepSeek-R1 marks a significant milestone in the evolution of AI reasoning technology. By combining a large mixture-of-experts architecture with test-time inference techniques, DeepSeek-R1 sets a new standard for logical inference, reasoning, mathematics, coding, and language understanding. As organizations worldwide seek to harness the power of AI, models like DeepSeek-R1 provide a promising glimpse into the future of intelligent and autonomous systems.

For those interested in experiencing the capabilities of DeepSeek-R1 firsthand, the model is available on the NVIDIA Build platform. This opportunity allows developers and enterprises to explore the potential of DeepSeek-R1 and witness its impact on agentic AI systems.

As the field of AI continues to advance, the development of models like DeepSeek-R1 underscores the importance of innovation and collaboration in driving progress. By embracing cutting-edge technology and pushing the boundaries of what is possible, the AI community is poised to unlock new possibilities and transform the way we interact with intelligent systems.

Visit NVIDIA’s official site for more information on DeepSeek-R1 and other groundbreaking AI technologies.