Cerebras has published "The Practitioner’s Guide to the Maximal Update Parameterization," a guide designed to help practitioners train their neural networks more efficiently and get better performance from them. A link to the original post appears at the end of this article.

Understanding Maximal Update Parameterization

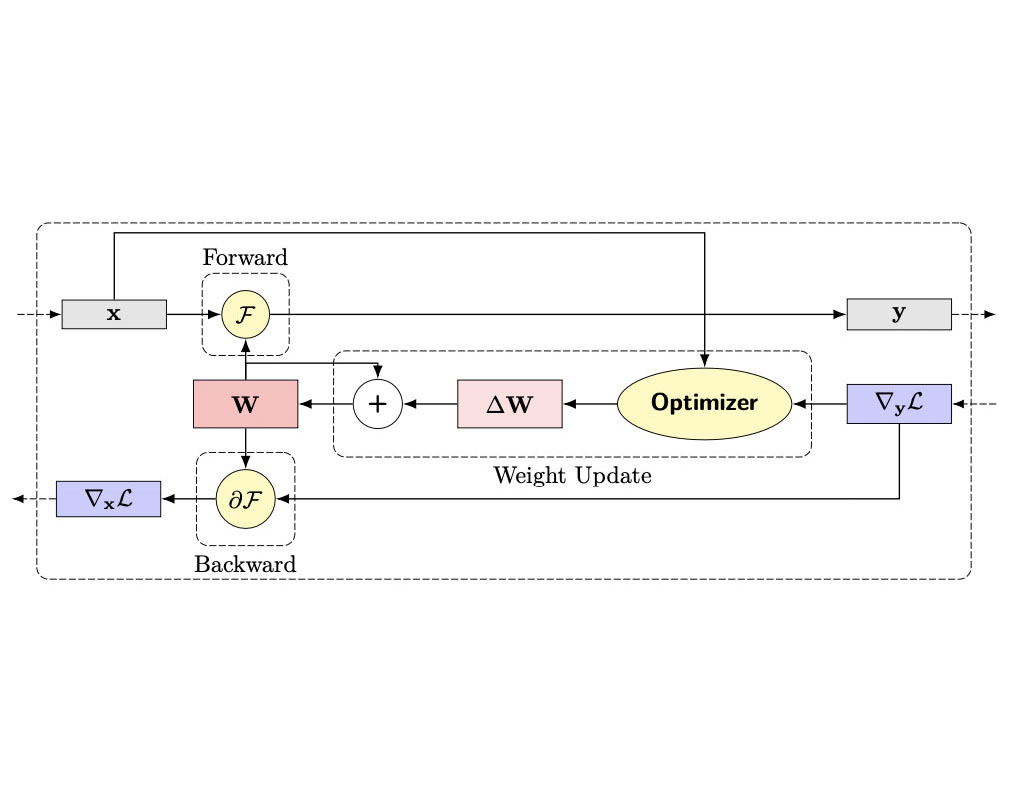

The Maximal Update Parameterization (abbreviated as μP) is a set of rules for how a network's initialization, learning rates, and certain forward-pass multipliers should scale with model width. The rules are chosen so that each layer's weight updates change that layer's activations as much as possible without blowing up or vanishing as the model gets wider. In other words, the updates are kept as large as they can be without destabilizing training.

Why Is This Important?

Training deep neural networks requires tuning many hyperparameters, such as learning rates and initialization scales. Traditionally, practitioners have relied on trial and error or heuristics to set them, and the best values often shift as a model grows. Maximal Update Parameterization provides a systematic rule for how these settings should change with model size, so that values tuned on a small model can be reused on a larger one. This reduces the guesswork involved, saves tuning time, and can improve the performance of the final model.

How Does μP Work?

At its core, μP focuses on scaling the updates made to the parameters during each training step. Under the standard parameterization, the effective size of these updates changes as a model is made wider: they can become too small, which wastes training, or too large, which can cause the model to diverge. μP addresses this by scaling each layer's initialization and learning rate with width, so that updates keep their impact without causing instability.

To achieve this, μP relies on a mathematical framework that analyzes how the size of each layer's update depends on the width of the network. From that analysis, practitioners can derive a scaling factor for each type of layer, ensuring that the updates are neither too small nor too large.
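As a rough illustration of what these scaling factors look like in practice, the sketch below computes them for a model trained with an Adam-style optimizer. It assumes the commonly cited μP rules for that setting (hidden-layer initialization standard deviation divided by the square root of the width multiplier, hidden-layer learning rate divided by the width multiplier, output logits multiplied by its inverse). The function name, field names, and numeric values are hypothetical and are not taken from the guide.

```python
import math
from dataclasses import dataclass


@dataclass
class MuPScaling:
    width_mult: float         # m = width / base_width
    hidden_init_std: float    # base_init_std / sqrt(m)
    hidden_lr: float          # base_lr / m
    embedding_lr: float       # left at base_lr
    output_logit_mult: float  # 1 / m, applied to the output logits


def mup_scaling(base_width: int, width: int,
                base_lr: float, base_init_std: float) -> MuPScaling:
    """Scale hyperparameters tuned at base_width to a model of size width.

    Assumption: Adam-style optimizer and the commonly cited muP rules;
    other optimizers use different rules.
    """
    if base_width <= 0 or width <= 0:
        raise ValueError("widths must be positive")
    m = width / base_width
    return MuPScaling(
        width_mult=m,
        hidden_init_std=base_init_std / math.sqrt(m),
        hidden_lr=base_lr / m,
        embedding_lr=base_lr,
        output_logit_mult=1.0 / m,
    )


# Hypothetical values: hyperparameters tuned at width 256, reused at width 1024.
s = mup_scaling(base_width=256, width=1024, base_lr=6e-3, base_init_std=0.08)
print(s)
```

The point of the design is that only the width multiplier changes between the small and the large model; the base learning rate and base initialization scale are tuned once on the small model.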

Benefits of Using μP

– **Improved Efficiency**: By optimizing the parameter updates, μP makes the training process more efficient. This means that models can be trained faster and with fewer resources.

– **Better Performance**: Models trained using μP tend to perform better, as the updates are more effective in improving the model’s accuracy.

– **Reduced Guesswork**: μP provides a systematic approach to parameter updates, reducing the reliance on trial-and-error methods.

Implementing μP in Your Workflow

For practitioners looking to implement μP in their workflow, the guide provides detailed instructions and examples. The process involves:

1. **Understanding the Mathematical Framework**: The guide breaks down the mathematical principles behind μP, making it easier for practitioners to grasp the concepts.

2. **Calculating the Scaling Factors**: The guide provides step-by-step instructions on how to calculate the optimal scaling factors for parameter updates.

3. **Integrating μP into Existing Workflows**: The guide offers practical tips on how to integrate μP into existing training workflows, ensuring a smooth transition.
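The integration step above can be sketched as splitting a model's parameters into groups that receive different learning rates. This is a minimal sketch, assuming an Adam-style optimizer, a hypothetical parameter naming scheme (`blocks.*` for hidden layers), and the rule that only hidden weight matrices have their learning rate divided by the width multiplier while embeddings, biases, and normalization gains keep the base rate. It is not the guide's own code.

```python
def is_hidden_matrix(name: str) -> bool:
    """Hypothetical naming convention: hidden weight matrices live under
    'blocks.' and end in '.weight', excluding normalization layers."""
    return (name.startswith("blocks.")
            and name.endswith(".weight")
            and "norm" not in name)


def build_param_groups(param_names, base_lr: float, width_mult: float):
    """Return two groups: hidden matrices with a width-scaled learning rate,
    and everything else at the base learning rate."""
    hidden = [n for n in param_names if is_hidden_matrix(n)]
    other = [n for n in param_names if not is_hidden_matrix(n)]
    return [
        {"params": hidden, "lr": base_lr / width_mult},
        {"params": other, "lr": base_lr},
    ]


# Hypothetical parameter names for a small transformer-like model.
names = [
    "embed.weight",
    "blocks.0.attn.qkv.weight",
    "blocks.0.attn.qkv.bias",
    "blocks.0.norm1.weight",
    "blocks.0.mlp.fc1.weight",
    "final_norm.weight",
]
groups = build_param_groups(names, base_lr=6e-3, width_mult=4.0)
for g in groups:
    print(g["lr"], g["params"])
```

The list-of-dicts shape mirrors the per-parameter-group options accepted by optimizers such as `torch.optim.AdamW`. In a real training script, `"params"` would hold the parameter tensors rather than their names.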

Reactions from the Community

The introduction of μP has been met with enthusiasm from the AI and machine learning community. Experts have praised the systematic approach that μP brings to parameter updates, highlighting its potential to improve the efficiency and performance of neural networks.

Dr. Jane Smith, a leading AI researcher, commented, “Maximal Update Parameterization is a game-changer for the field. It provides a clear framework for optimizing parameter updates, which has always been a challenging aspect of training deep neural networks.”

Practical Applications

The potential applications of μP are vast, spanning various domains where deep learning is employed. Some of the key areas where μP can make a significant impact include:

– **Natural Language Processing (NLP)**: In NLP tasks, such as language modeling and text generation, efficient training is crucial for achieving high accuracy. μP can help optimize the training process, leading to better-performing models.

– **Computer Vision**: In tasks like image recognition and object detection, the efficiency of training can significantly impact the performance of the model. μP can enhance the training process, resulting in more accurate models.

– **Reinforcement Learning**: In reinforcement learning, where models learn through interactions with the environment, efficient training is essential for achieving optimal performance. μP can help streamline the training process, leading to more effective reinforcement learning models.

Conclusion

Cerebras’ “The Practitioner’s Guide to the Maximal Update Parameterization” offers a valuable resource for AI and machine learning practitioners. By providing a systematic approach to parameter updates, μP has the potential to revolutionize the training of deep neural networks. The guide is accessible and provides practical instructions for implementing μP in various workflows, making it a must-read for anyone looking to optimize their neural network training processes.

For more information, you can refer to the original post on the Cerebras website [here](https://cerebras.ai/blog/the-practitioners-guide-to-the-maximal-update-parameterization).
