Transforming Robotics with NVIDIA’s Advanced Simulation Technologies

Autonomous robotics is being transformed by scalable simulation technologies that streamline development and cut costs. Central to this shift is Universal Scene Description (OpenUSD), an interoperable framework for describing and building virtual worlds. These virtual environments are crucial for developing robotics technology, allowing machines to learn and adapt within simulated scenarios that closely mimic real-world conditions.
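To make the idea of a scene description concrete, here is a minimal sketch that writes a tiny scene in OpenUSD's human-readable `.usda` text format using only the Python standard library. The `Box` class, the helper functions, and the crate and pallet values are hypothetical and exist only for illustration; a real pipeline would normally author scenes through the OpenUSD libraries or Omniverse tools rather than by formatting strings.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical obstacle: a cube with an edge length and a position, in meters."""
    name: str
    size: float
    position: tuple[float, float, float]


def box_to_usda(box: Box, indent: str = "    ") -> str:
    """Render one Box as a USD Cube prim with a single translate op."""
    x, y, z = box.position
    lines = [
        f'def Cube "{box.name}"',
        "{",
        f"    double size = {box.size}",
        f"    double3 xformOp:translate = ({x}, {y}, {z})",
        '    uniform token[] xformOpOrder = ["xformOp:translate"]',
        "}",
    ]
    return "\n".join(indent + line for line in lines)


def scene_to_usda(boxes: list[Box]) -> str:
    """Assemble a complete .usda layer with one root Xform prim named World."""
    header = "\n".join([
        "#usda 1.0",
        "(",
        '    defaultPrim = "World"',
        "    metersPerUnit = 1",
        '    upAxis = "Z"',
        ")",
    ])
    body = "\n\n".join(box_to_usda(box) for box in boxes)
    return f'{header}\n\ndef Xform "World"\n{{\n{body}\n}}\n'


# Two illustrative obstacles for a warehouse-style scene.
scene_text = scene_to_usda([
    Box("Crate", 0.5, (1.0, 0.0, 0.25)),
    Box("Pallet", 1.0, (-2.0, 1.5, 0.5)),
])
print(scene_text)
```

The output is plain text, so the same scene can be versioned, compared, and opened by any tool that reads OpenUSD. That portability is what the article means by interoperability.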

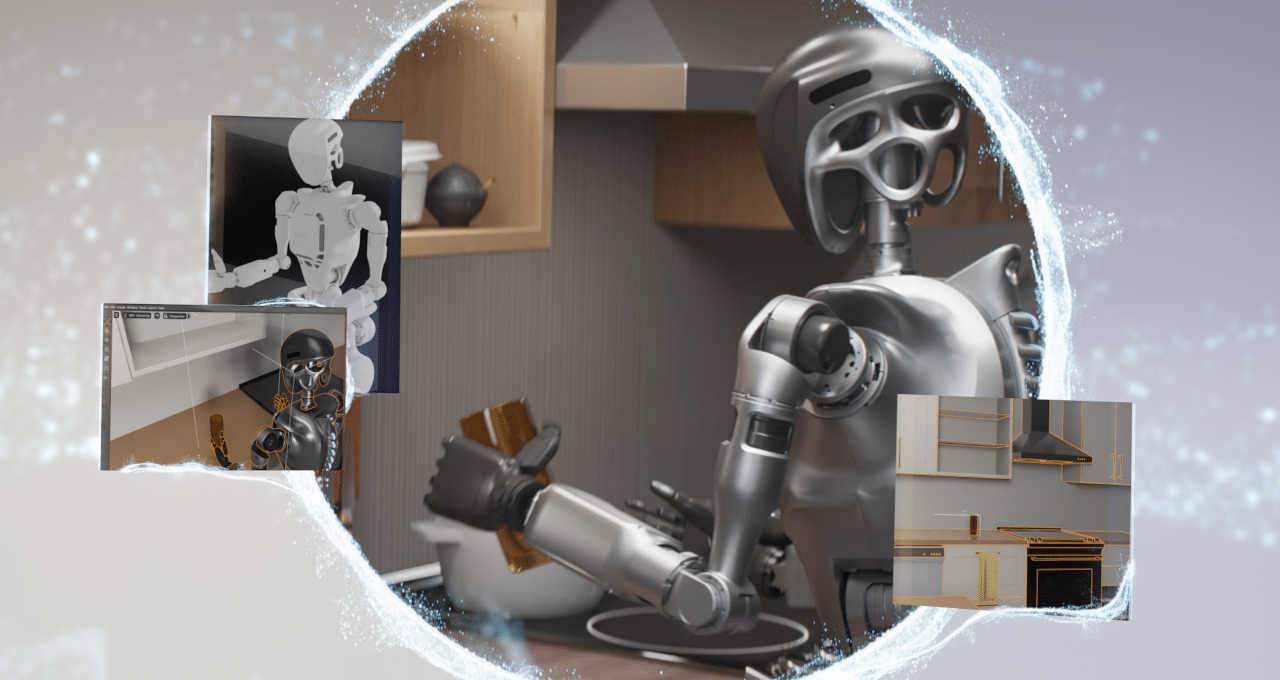

NVIDIA’s Isaac Sim is an application for simulating and testing AI-driven robots. Built on the NVIDIA Omniverse platform, it gives developers the tools to simulate robots in realistic virtual settings. This capability helps refine the AI models that drive robotic functions, improving robot performance and shortening deployment time across applications.

During the AWS re:Invent event, NVIDIA announced the availability of Isaac Sim on Amazon EC2 G6e instances, powered by NVIDIA L40S GPUs. Running Isaac Sim on these cloud instances improves its performance and accessibility: the added computational power allows for more scalable and efficient robotics simulations, making it easier for organizations to undertake complex simulations that would otherwise be resource-intensive.

The Role of Synthetic Data in Robotics Simulation

Companies like Cobot, Field AI, and Vention are using Isaac Sim to simulate and validate robotic performance. Firms such as SoftServe and Tata Consultancy Services are using synthetic data to train AI models for a range of robotics applications. This synthetic data is generated in simulation and supplies training examples for the AI models that power autonomous machines.

Historically, robotics experiments required extensive physical trials, which were both costly and labor-intensive. Simulation technology has revolutionized this process by creating precise virtual environments where robots can learn and improve through trial and error. This method not only accelerates the development of AI algorithms but also ensures that robots can be tested safely and efficiently, without the risks associated with real-world testing.
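The trial-and-error loop described above can be illustrated with a toy example. This sketch tunes two controller gains for a simulated one-dimensional point mass by repeatedly trying random variations and keeping whichever performs best. The dynamics, the cost function, the gain limits, and every name here are assumptions chosen for illustration; this is not how Isaac Sim or any NVIDIA tool trains robots, only a small instance of learning by simulated trial and error.

```python
import random


def simulate(kp: float, kd: float, steps: int = 200, dt: float = 0.05) -> float:
    """Run one episode of a toy unit-mass system; return accumulated tracking error."""
    position, velocity, target = 0.0, 0.0, 1.0
    cost = 0.0
    for _ in range(steps):
        force = kp * (target - position) - kd * velocity  # PD controller
        velocity += force * dt                            # unit mass, so a = F
        position += velocity * dt
        cost += abs(target - position) * dt
    return cost


def trial_and_error(trials: int = 300, seed: int = 0) -> tuple[tuple[float, float], float]:
    """Random search: perturb the best gains so far and keep any improvement."""
    rng = random.Random(seed)
    best_gains = (1.0, 0.0)
    best_cost = simulate(*best_gains)
    for _ in range(trials):
        candidate = (
            min(50.0, max(0.0, best_gains[0] + rng.gauss(0.0, 1.0))),
            min(20.0, max(0.0, best_gains[1] + rng.gauss(0.0, 0.5))),
        )
        cost = simulate(*candidate)
        if cost < best_cost:  # a failed trial costs nothing but compute time
            best_gains, best_cost = candidate, cost
    return best_gains, best_cost


baseline_cost = simulate(1.0, 0.0)
best_gains, best_cost = trial_and_error()
print(f"baseline cost {baseline_cost:.3f}, best cost after search {best_cost:.3f}")
```

Each of the 300 trials here takes a fraction of a millisecond and cannot damage hardware, which is the practical argument for running this kind of search in simulation before touching a physical robot.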

Physical AI, a term that denotes AI models capable of understanding and interacting with the physical world, represents the next frontier in autonomous robotics. These systems include self-driving vehicles, industrial robots, and even infrastructure managed by robotic systems. The development of physical AI relies heavily on robotics simulation, one part of what NVIDIA calls the "three computer solution" in robotics: one computer to train the AI model, one to simulate and test it, and one to run it on the robot. This approach allows engineers and researchers to design, test, and refine systems in a controlled virtual environment, reducing the costs and risks associated with physical prototyping.

Enhancing Robotics with Synthetic Data and Simulation

The generation of synthetic data is crucial for overcoming the challenges of limited or inaccessible datasets needed for training AI models, particularly in fields like computer vision. By utilizing synthetic data, developers can create comprehensive datasets that enhance the accuracy and effectiveness of AI models. For instance, action recognition models, which are essential for understanding human movements, benefit significantly from synthetic data generation.
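As a rough illustration of how a synthetic dataset can be made comprehensive by construction, the sketch below generates a labeled manifest in which every action class appears equally often while camera height, lighting, and actor orientation are randomized. The action labels, parameter ranges, and field names are all hypothetical, and the code does not call Isaac Sim or any other NVIDIA API. In a real workflow each record would drive a rendered video clip rather than stand alone.

```python
import json
import random
from dataclasses import asdict, dataclass

ACTIONS = ("walk", "wave", "lift")  # hypothetical action labels


@dataclass
class SceneSample:
    """Parameters and ground-truth label for one synthetic clip."""
    frame_id: int
    action: str
    camera_height_m: float
    light_intensity: float
    actor_yaw_deg: float


def generate_samples(count: int, seed: int = 0) -> list[SceneSample]:
    """Cycle through the labels for class balance; randomize everything else."""
    rng = random.Random(seed)
    samples = []
    for frame_id in range(count):
        samples.append(SceneSample(
            frame_id=frame_id,
            action=ACTIONS[frame_id % len(ACTIONS)],
            camera_height_m=round(rng.uniform(1.0, 3.0), 2),
            light_intensity=round(rng.uniform(0.2, 1.0), 2),
            actor_yaw_deg=round(rng.uniform(0.0, 360.0), 1),
        ))
    return samples


samples = generate_samples(9)
manifest = json.dumps([asdict(sample) for sample in samples], indent=2)
print(manifest)
```

Because the generator controls the scene, the label for every sample is known exactly and rare viewpoints or lighting conditions can be requested on demand, two things that are hard to guarantee with footage collected in the real world.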

Moreover, NVIDIA has introduced a new reference workflow that accelerates the creation of synthetic 3D datasets using generative AI and OpenUSD NIM microservices. This integration streamlines the process from scene creation to data augmentation, enabling faster and more accurate AI model training. Developers interested in learning more about creating human action recognition video datasets with Isaac Sim can explore NVIDIA’s technical blog, which provides detailed guidance on scaling action recognition models using synthetic data.

Simulating Humanoid Robots with Isaac Lab

Humanoid robots, representing the forefront of embodied AI, present unique challenges that sit at the intersection of mechatronics, control theory, and artificial intelligence. Simulation plays a pivotal role in overcoming these challenges by offering a safe, cost-effective platform for training and testing humanoids. NVIDIA’s Isaac Lab, an open-source framework built on Isaac Sim, allows developers to train humanoid robot policies at scale through simulations. This approach is being adopted by leading commercial robot makers to manage complex movements and interactions.

Project GR00T, an ongoing research initiative by NVIDIA, is at the forefront of developing workflows that facilitate the generation of simulation-ready environments in OpenUSD. These innovations are critical for training generalist robots capable of manipulation, locomotion, and navigation. Recent research from Project GR00T highlights the use of advanced simulation to train interactive humanoids, showcasing the development of a unified controller for physically simulated humanoids known as MaskedMimic. This system enables a wide range of motions across diverse terrains, driven by intuitive user-defined intents.

Digital Twins and AI Training Simplification

Across various industries, partners are utilizing Isaac Sim, Isaac Lab, Omniverse, and OpenUSD to design, simulate, and deploy more intelligent autonomous machines. Companies like Agility are using Isaac Lab to create simulations that allow robotic behaviors developed in virtual settings to transfer seamlessly to physical robots. This capability enhances the intelligence, agility, and robustness of robots when deployed in real-world environments.
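One common way to check whether a behavior developed in simulation is likely to survive the move to hardware is to evaluate it across many randomized physical parameters, on the assumption that the real robot falls somewhere inside that range. The sketch below applies this idea to a toy one-dimensional joint with a fixed controller. The mass and friction ranges, the controller gains, and all function names are illustrative assumptions and are not taken from Agility, Isaac Lab, or any NVIDIA workflow.

```python
import random
import statistics


def settle_error(mass: float, friction: float, kp: float = 20.0, kd: float = 6.0,
                 steps: int = 400, dt: float = 0.01) -> float:
    """Simulate a 1D joint under a fixed PD controller; return final distance to target."""
    position, velocity, target = 0.0, 0.0, 1.0
    for _ in range(steps):
        force = kp * (target - position) - kd * velocity - friction * velocity
        velocity += (force / mass) * dt
        position += velocity * dt
    return abs(target - position)


def evaluate_under_randomization(trials: int = 100, seed: int = 0) -> list[float]:
    """Run the same controller against randomly drawn physical parameters."""
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        mass = rng.uniform(0.5, 2.0)      # hypothetical range, kilograms
        friction = rng.uniform(0.0, 1.0)  # hypothetical viscous friction coefficient
        errors.append(settle_error(mass, friction))
    return errors


errors = evaluate_under_randomization()
print(f"mean error {statistics.mean(errors):.4f}, worst case {max(errors):.4f}")
```

If the worst-case error stays small across the whole range, the controller does not depend on one exact set of simulated physics, which is a reasonable though not sufficient sign that it will carry over to a physical robot.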

Similarly, Cobot leverages Isaac Sim to optimize logistics in sectors such as warehousing and manufacturing, while Cohesive Robotics integrates Isaac Sim into its Argus OS framework to develop robotic workcells for high-mix manufacturing settings. Field AI uses Isaac Sim and Isaac Lab to test its models in complex environments, spanning industries like construction, oil and gas, and mining.

Furthermore, Fourier employs NVIDIA Isaac Gym and Isaac Lab to train its GR-2 humanoid robot, utilizing reinforcement learning and advanced simulations to enhance adaptability and real-world performance. Companies like Galbot and Standard Bots are also integrating Isaac Sim into their processes, verifying data generation and simulating robotic performance in various applications.

Engaging with OpenUSD for Robotics Development

NVIDIA is committed to supporting robotics developers through initiatives like livestream office hours and study groups, where experts offer technical guidance on using Isaac Sim and Isaac Lab. For those new to simulating robots, NVIDIA’s Deep Learning Institute offers a free course to help developers get started.

In addition to these resources, NVIDIA provides a self-paced training curriculum for optimizing OpenUSD workflows. These courses are designed for 3D practitioners and developers, offering a comprehensive introduction to OpenUSD. To stay updated with the latest developments in AI and graphics, enthusiasts can subscribe to NVIDIA news, join the community, and follow NVIDIA Omniverse on various social media platforms. For more on NVIDIA’s advancements, tune in to the CES keynote by NVIDIA’s founder and CEO.

In summary, NVIDIA’s advancements in simulation technologies are revolutionizing the field of autonomous robotics. By providing powerful tools and platforms like Isaac Sim and OpenUSD, NVIDIA is enabling developers to create, test, and refine AI models in virtual environments, paving the way for smarter and more efficient robotic systems.
