Harnessing the Power of Local Large Language Models with Docker Model Runner

Artificial intelligence (AI) is rapidly becoming an integral part of modern applications, yet running large language models (LLMs) locally remains complex. Developers must select an appropriate model, deal with hardware specifics, and optimize for performance. At the same time, demand is growing to run these models locally for development, testing, and offline scenarios. Docker Model Runner is designed to simplify this process.

Introducing Docker Model Runner

Docker Model Runner is now available in beta and comes bundled with Docker Desktop 4.40 for macOS on Apple silicon. It lets developers pull, run, and experiment with LLMs on their local machines without setting up dedicated infrastructure. The initial beta release of Model Runner offers the following key features:

- Local LLM Inference: Model Runner includes an integrated inference engine built on llama.cpp and exposes an OpenAI-compatible API for local inference.

- GPU Acceleration: On Apple silicon, the inference engine runs directly as a host process, so it can use the GPU without going through a container.

- Model Distribution and Reusability: A growing repository of popular, ready-to-use models is available as standard OCI artifacts, enabling easy distribution and reuse across existing container registry infrastructures.

Enabling Docker Model Runner for Local LLMs

Docker Model Runner is enabled by default as part of Docker Desktop 4.40 for macOS on Apple silicon. For users who may have disabled it, re-enabling is straightforward through the command-line interface (CLI):

```bash
docker desktop enable model-runner
```

By default, Model Runner is accessible through the Docker socket on the host, or from containers via the `model-runner.docker.internal` endpoint. To reach it over TCP from a host process, for example when pointing an OpenAI SDK in your code at it, enable TCP host access:

```bash
docker desktop enable model-runner --tcp 12434
```

Exploring the Command Line Interface (CLI)

Working with the Model Runner CLI feels much like working with containers, although the execution model differs in some important ways. For this walkthrough, we use a small model so that it runs quickly even on limited hardware: the SmolLM2 model published by Hugging Face.

To start, pull a model. As with Docker images, if you omit the tag, it defaults to `latest`; specifying the tag explicitly is still advisable for clarity:

```bash
docker model pull ai/smollm2:360M-Q4_K_M
```

This command pulls the SmolLM2 model, which features 360 million parameters and 4-bit quantization. The tagging scheme for models distributed by Docker reflects the model's metadata:

```plaintext
{model}:{parameters}-{quantization}
```

After pulling the model, you can run it by posing a question:

```bash
docker model run ai/smollm2:360M-Q4_K_M "Give me a fact about whales."
```

The model might, for example, respond that whales are remarkable marine creatures whose body structure lets them swim efficiently. Keep in mind, however, that while LLMs can generate fascinating responses, their output is not always accurate or predictable. Verify what the model tells you, especially with small models that have few parameters or with aggressively quantized ones (fewer bits per weight).
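
Because the `{model}:{parameters}-{quantization}` tagging scheme is so regular, tooling can read a model's size and quantization straight from its reference. The sketch below is a hypothetical helper, not part of any Docker SDK; it assumes an explicit tag that follows the scheme exactly, so references such as an untagged `ai/smollm2` (which defaults to `latest`) are rejected rather than guessed at.

```java
import java.util.Objects;

// Hypothetical helper (not part of any Docker SDK): splits a model reference
// such as "ai/smollm2:360M-Q4_K_M" using the {model}:{parameters}-{quantization} scheme.
record ModelTag(String model, String parameters, String quantization) {

    static ModelTag parse(String reference) {
        Objects.requireNonNull(reference, "reference");
        // The tag is whatever follows the last ':' that comes after the last '/',
        // so a registry host with a port (e.g. "host:5000/...") is not mistaken for a tag.
        int slash = reference.lastIndexOf('/');
        int colon = reference.lastIndexOf(':');
        if (colon <= slash || colon == reference.length() - 1) {
            throw new IllegalArgumentException("Expected an explicit tag in: " + reference);
        }
        String model = reference.substring(0, colon);
        String tag = reference.substring(colon + 1);
        // Split at the first '-': quantization names such as Q4_K_M use '_' instead.
        int dash = tag.indexOf('-');
        if (dash <= 0 || dash == tag.length() - 1) {
            throw new IllegalArgumentException("Tag is not {parameters}-{quantization}: " + tag);
        }
        return new ModelTag(model, tag.substring(0, dash), tag.substring(dash + 1));
    }
}

class ModelTagDemo {
    public static void main(String[] args) {
        ModelTag tag = ModelTag.parse("ai/smollm2:360M-Q4_K_M");
        // Prints: ModelTag[model=ai/smollm2, parameters=360M, quantization=Q4_K_M]
        System.out.println(tag);
    }
}
```

Splitting at the first `-` rather than the last keeps quantization names intact, since formats like `Q4_K_M` use underscores internally.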

Technical Insights into Model Runner Execution

Running `docker model run` does not simply start a container. Instead, it calls an inference server API endpoint hosted by Docker Desktop's Model Runner, which provides an OpenAI-compatible API. The inference engine is llama.cpp, running as a native host process. The engine loads the requested model on demand, performs the inference, and keeps the model in memory until another model is requested or a 5-minute inactivity timeout is reached.

Interestingly, you don't need to run `docker model run` before interacting with a specific model from a host process or from within a container. Model Runner loads the requested model dynamically, provided it has already been pulled and is available locally.

Integrating Model Runner into Application Code
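
For application code, a practical consequence of on-demand loading is that the first request for a model, or the first one after an idle period or a model switch, may take noticeably longer than the requests that follow. The Java sketch below is a hypothetical, simplified model of the lifecycle described in the previous section (one model in memory, replaced when another is requested, unloaded after 5 minutes of inactivity). It is not Docker's implementation; the class and method names are illustrative only.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical, simplified model of the lifecycle described above; it is not
// Docker's implementation. One model is held in memory at a time and is
// (re)loaded when nothing is loaded, a different model is requested, or the
// previous request was at least 5 minutes ago.
class LoadedModelTracker {
    static final Duration IDLE_TIMEOUT = Duration.ofMinutes(5);

    private String loadedModel; // null until the first request
    private Instant lastUsed;

    /** Records a request at time {@code now}; returns true if it would trigger a load. */
    boolean request(String model, Instant now) {
        boolean idleExpired = loadedModel != null
                && Duration.between(lastUsed, now).compareTo(IDLE_TIMEOUT) >= 0;
        boolean needsLoad = loadedModel == null || idleExpired || !loadedModel.equals(model);
        loadedModel = model; // the requested model replaces any previously loaded one
        lastUsed = now;
        return needsLoad;
    }
}

class LifecycleDemo {
    public static void main(String[] args) {
        LoadedModelTracker tracker = new LoadedModelTracker();
        Instant t0 = Instant.parse("2025-01-01T00:00:00Z");
        String smol = "ai/smollm2:360M-Q4_K_M";
        System.out.println(tracker.request(smol, t0));                  // true: first load
        System.out.println(tracker.request(smol, t0.plusSeconds(60)));  // false: still loaded
        System.out.println(tracker.request(smol, t0.plusSeconds(420))); // true: idle for 6 minutes
    }
}
```

In practice this suggests generous client timeouts for the first call, or a cheap warm-up request at application start-up.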

Model Runner exposes an OpenAI-compatible endpoint at:

- For containers: http://model-runner.docker.internal/engines/v1

- For host processes: http://localhost:12434/engines/v1 (assuming TCP host access is enabled on the default port 12434)

This endpoint works with any OpenAI-compatible client or framework. For example, with Java and LangChain4j, you can set the Model Runner endpoint as the base URL and specify the model using the Docker Model addressing scheme:

```java
import dev.langchain4j.model.openai.OpenAiChatModel;

public class WhaleFact {
    public static void main(String[] args) {
        // Point LangChain4j's OpenAI client at the local Model Runner endpoint
        OpenAiChatModel model = OpenAiChatModel.builder()
                .baseUrl("http://localhost:12434/engines/v1")
                .modelName("ai/smollm2:360M-Q4_K_M")
                .build();

        String answer = model.chat("Give me a fact about whales.");
        System.out.println(answer);
    }
}
```

Make sure the model has already been pulled and is present locally; otherwise the code will not work.
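
Because the API is OpenAI-compatible, a framework is optional. The sketch below uses only the JDK's `java.net.http` client and assumes the usual OpenAI convention of appending `/chat/completions` to the base URL; the class and method names are hypothetical. Request construction is kept separate from sending so it can be checked without a running Model Runner, and the JSON escaping is deliberately minimal.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class ModelRunnerHttp {
    // Endpoints listed above; HOST_BASE assumes TCP host access on port 12434.
    static final String CONTAINER_BASE = "http://model-runner.docker.internal/engines/v1";
    static final String HOST_BASE = "http://localhost:12434/engines/v1";

    /** Builds (but does not send) an OpenAI-style chat completion request. */
    static HttpRequest chatRequest(String baseUrl, String model, String prompt) {
        String body = String.format(
                "{\"model\": \"%s\", \"messages\": [{\"role\": \"user\", \"content\": \"%s\"}]}",
                escape(model), escape(prompt));
        return HttpRequest.newBuilder(URI.create(baseUrl + "/chat/completions"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    /** Escapes only backslashes and double quotes; a real client should use a JSON library. */
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) throws Exception {
        // Use CONTAINER_BASE instead when this code runs inside a container.
        HttpRequest request = chatRequest(HOST_BASE, "ai/smollm2:360M-Q4_K_M",
                "Give me a fact about whales.");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        // Raw JSON; in the OpenAI format the reply is at choices[0].message.content.
        System.out.println(response.body());
    }
}
```

Running `main` requires Model Runner with TCP host access enabled and the model already pulled.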

Discovering More Models

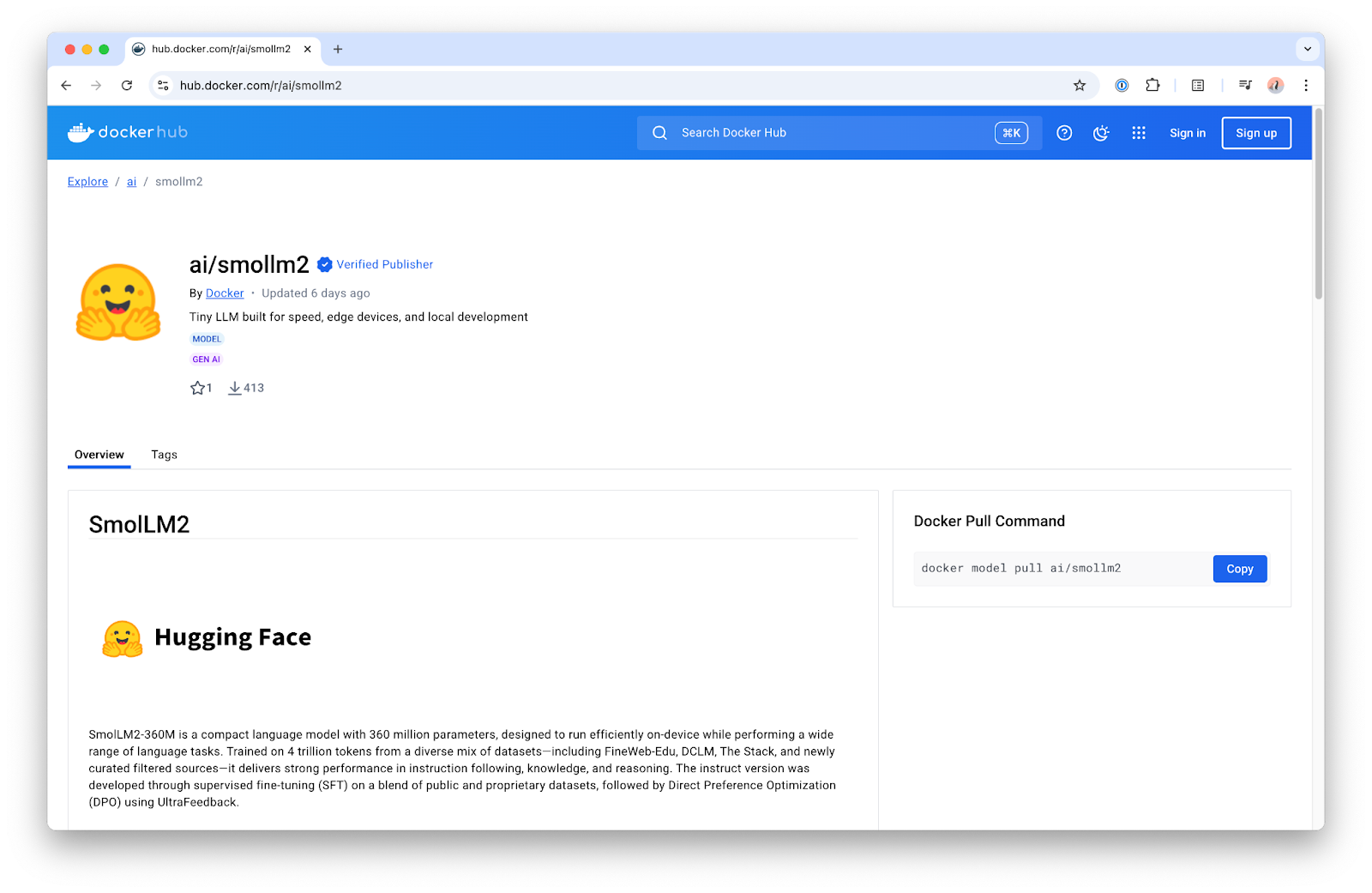

While SmolLM models are a starting point, Docker Model Runner supports a variety of other models. To explore available models, visit the ai/ namespace on Docker Hub. Here, you’ll find a curated list of popular models suitable for local use cases, available in different configurations to match varying hardware and performance needs. Each model’s overview page provides detailed information in the model card.
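
A rough way to see why parameter count and quantization matter when matching a model to your hardware is a back-of-the-envelope estimate: the weights alone need about parameters × bits per weight ÷ 8 bytes. The helper below is hypothetical, not a Docker tool, and intentionally ignores the KV cache, runtime overhead, and the fact that K-quant formats such as Q4_K_M mix precisions, so real downloads and memory use will be larger than this nominal figure.

```java
// Hypothetical back-of-the-envelope helper: nominal weight size only.
// Ignores KV cache, runtime overhead, and mixed-precision quantization formats.
class WeightSizeEstimate {
    static long weightBytes(long parameters, double bitsPerWeight) {
        return Math.round(parameters * bitsPerWeight / 8.0);
    }

    public static void main(String[] args) {
        // 360 million parameters at a nominal 4 bits per weight
        long bytes = weightBytes(360_000_000L, 4.0);
        System.out.printf("%.0f MB%n", bytes / 1_000_000.0); // 180 MB
    }
}
```

By the same arithmetic, the same model at 16 bits per weight would need about 720 MB, which is why lower-bit variants suit machines with less memory.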

What Lies Ahead?

This introduction is just the beginning of what Docker Model Runner can accomplish. Exciting features are in development, and we’re eager to see how developers leverage this tool in their projects. For practical guidance, watch our latest YouTube tutorial on running LLMs locally with Model Runner. Stay tuned to our blog for updates, tips, and in-depth explorations as we continue to enhance Model Runner’s capabilities.

For more information, please refer to the original announcement on Docker’s blog.

Resources

To dive deeper into using Docker Model Runner, explore the following resources:

- Docker Model Runner Documentation

- GitHub Repository for llama.cpp

- Docker Hub

This comprehensive guide aims to equip developers with the necessary insights to effectively utilize Docker Model Runner for local LLM deployments, paving the way for new and innovative applications in the AI ecosystem.

