Amazon EC2’s New Trn2 Instances and Trn2 UltraServers: A Leap in ML Training and Inference Performance

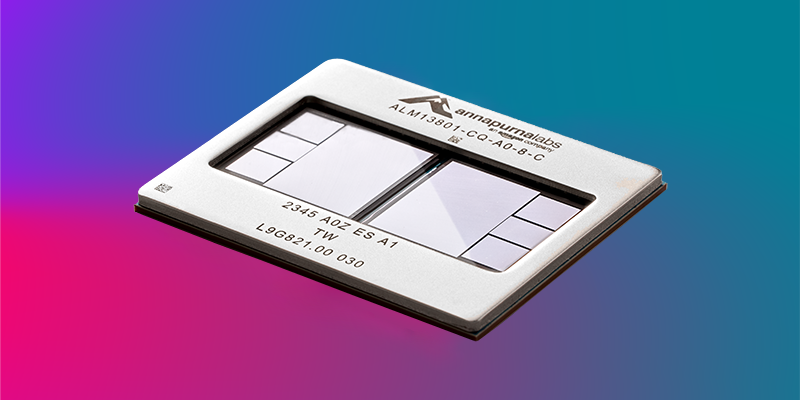

Amazon has unveiled new Amazon Elastic Compute Cloud (EC2) Trn2 instances and Trn2 UltraServers, which AWS presents as its most powerful EC2 compute options for machine learning (ML) training and inference. Both are powered by the second generation of AWS Trainium chips, AWS Trainium2, which significantly outperforms the first generation.

Enhanced Performance with Trainium2 Chips

The Trn2 instances are a major upgrade over the first-generation Trn1 instances: they are four times faster and offer four times more memory bandwidth and three times more memory capacity. Separately, AWS cites 30-40% better price performance than the current GPU-based EC2 P5e and P5en instances. For businesses that rely heavily on ML workloads, that means more compute at a lower cost.

Each Trn2 instance is equipped with 16 Trainium2 chips, 192 virtual CPUs (vCPUs), 2 TiB of memory, and 3.2 Tbps of Elastic Fabric Adapter (EFA) v3 network bandwidth. EFA v3 provides up to 50% lower latency than the previous generation. This configuration is aimed at the most demanding ML training and inference workloads.

Trn2 UltraServers: Pushing the Boundaries

Trn2 UltraServers are a new kind of EC2 compute offering. Each UltraServer features 64 Trainium2 chips connected by NeuronLink, a high-bandwidth, low-latency interconnect. This setup is optimized for peak inference and training performance on frontier foundation models, making UltraServers well suited to large-scale ML applications.

Already, tens of thousands of Trainium chips are powering Amazon and AWS services. For instance, more than 80,000 AWS Inferentia and Trainium1 chips were utilized to support the Rufus shopping assistant during the most recent Prime Day. The new Trainium2 chips are also powering latency-optimized versions of advanced AI models, such as Llama 3.1 405B and Claude 3.5 Haiku models, on Amazon Bedrock.

Scaling Up and Scaling Out

The sustained growth in the size and complexity of ML models calls for new forms of compute power and new architectures. In the past, scalability was achieved through either scaling up (using a bigger computer) or scaling out (using more computers). With Trainium2 chips and Trn2 instances, both approaches apply, each at a different level of the hardware hierarchy.

The Building Blocks: NeuronCores

At the core of the Trainium2 chip is the NeuronCore. Each third-generation NeuronCore includes several components:

- Scalar Engine: Handles one input to one output processing.

- Vector Engine: Manages multiple inputs to multiple outputs.

- Tensor Engine: Facilitates systolic array multiplication, convolution, and transposition.

- GPSIMD Core: A general-purpose single instruction multiple data core.
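The difference between the first three engines is easier to see with a toy example. The sketch below is a conceptual analogy in plain Python, not Neuron code: the function names are hypothetical, and the choice of ReLU, softmax, and matrix multiplication as representative operations is an assumption made for illustration.

```python
import math

def scalar_op(xs):
    """One input to one output: each result depends on a single element (here, ReLU)."""
    return [max(0.0, x) for x in xs]

def vector_op(xs):
    """Multiple inputs to multiple outputs: each result depends on the whole vector (here, softmax)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def tensor_op(a, b):
    """Matrix multiplication, the kind of work a systolic array is built for."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

print(scalar_op([-1.0, 2.0]))                      # [0.0, 2.0]
print(tensor_op([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

On real hardware these operations are dispatched by the compiler; the point here is only the shape of the data dependency in each case.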

Each Trainium2 chip houses eight NeuronCores and 96 GiB of High Bandwidth Memory (HBM) with 2.9 TB/second of bandwidth. The cores can be used individually or grouped into logical cores. A single chip delivers up to 1.3 petaflops of dense FP8 compute and up to 5.2 petaflops of sparse FP8 compute, helped by optimized use of memory bandwidth.

From Trn2 Instances to UltraServers

A single Trn2 instance comprises 16 Trainium2 chips, totaling 128 NeuronCores, 1.5 TiB of HBM, and 46 TB/second of HBM bandwidth. This setup delivers up to 20.8 petaflops of dense FP8 compute and 83.2 petaflops of sparse FP8 compute. The chips are interconnected through NeuronLink in a 2D torus, ensuring high-bandwidth, low-latency communication.
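To make the 2D torus concrete, here is a minimal sketch of how neighbors wrap around in such a topology. It assumes the 16 chips are arranged as a 4 x 4 grid numbered row by row; that layout, the numbering, and the function name `torus_neighbors` are illustrative assumptions, not details taken from AWS documentation.

```python
def torus_neighbors(chip, rows=4, cols=4):
    """Return the sorted IDs of the four neighbors of `chip` in a rows x cols 2D torus.

    Chips are numbered row by row (an assumption for this sketch). Edges wrap
    around, so every chip has exactly four direct neighbors.
    """
    r, c = divmod(chip, cols)
    return sorted({
        ((r - 1) % rows) * cols + c,   # up (wraps to the last row)
        ((r + 1) % rows) * cols + c,   # down (wraps to the first row)
        r * cols + (c - 1) % cols,     # left (wraps to the last column)
        r * cols + (c + 1) % cols,     # right (wraps to the first column)
    })

print(torus_neighbors(0))  # [1, 3, 4, 12]
print(torus_neighbors(5))  # [1, 4, 6, 9]
```

Because the edges wrap, a corner chip such as chip 0 is just as well connected as a chip in the middle of the grid, which keeps the worst-case hop count low.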

An UltraServer is essentially a combination of four Trn2 instances, connected using NeuronLink. This results in 64 Trainium2 chips, 512 NeuronCores, 6 TiB of HBM, and 185 TB/second of HBM bandwidth, for up to 83 petaflops of dense FP8 compute and 332 petaflops of sparse FP8 compute. UltraServers are designed for training and inference tasks involving trillions of parameters and are available in preview form to interested users.
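The instance and UltraServer figures follow directly from the per-chip numbers: multiply by 16 for an instance, then by 4 for an UltraServer. The sketch below reproduces that arithmetic; the `Specs` class and its field names are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Specs:
    neuron_cores: int
    hbm_gib: int
    hbm_tb_per_s: float
    dense_fp8_pflops: float
    sparse_fp8_pflops: float

    def scaled(self, n):
        """Aggregate specs for n identical units (simple linear scaling)."""
        return Specs(
            self.neuron_cores * n,
            self.hbm_gib * n,
            self.hbm_tb_per_s * n,
            self.dense_fp8_pflops * n,
            self.sparse_fp8_pflops * n,
        )

# Per-chip figures as quoted in the article.
chip = Specs(neuron_cores=8, hbm_gib=96, hbm_tb_per_s=2.9,
             dense_fp8_pflops=1.3, sparse_fp8_pflops=5.2)

instance = chip.scaled(16)       # one Trn2 instance = 16 chips
ultraserver = instance.scaled(4)  # one UltraServer = 4 instances

for name, s in (("instance", instance), ("ultraserver", ultraserver)):
    print(name, s.neuron_cores, "cores,", s.hbm_gib / 1024, "TiB HBM,",
          round(s.hbm_tb_per_s, 1), "TB/s,",
          round(s.dense_fp8_pflops, 1), "dense PF,",
          round(s.sparse_fp8_pflops, 1), "sparse PF")
```

The computed values (46.4 and 185.6 TB/second, 83.2 and 332.8 petaflops) are slightly higher than the rounded-down figures quoted in the text.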

UltraClusters and Distributed Training

Trn2 instances and UltraServers are deployed in EC2 UltraClusters, enabling distributed training across thousands of Trainium chips. These clusters feature a petabit-scale, non-blocking network and have access to Amazon FSx for Lustre, a high-performance storage solution.

Getting Started with Trn2 Instances

Trn2 instances are now available for production use in the US East (Ohio) AWS Region. Users can reserve up to 64 instances for up to six months using Amazon EC2 Capacity Blocks for ML, with reservations accepted up to eight weeks in advance.
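Before requesting a reservation, it can help to sanity-check a plan against the limits quoted above. The following is a minimal local sketch, not a call to any AWS API: the function name `check_reservation` is hypothetical, "six months" is treated as an upper bound and approximated as 182 days, and the real Capacity Blocks service may apply additional or different rules.

```python
from datetime import date, timedelta

MAX_INSTANCES = 64
MAX_LEAD_TIME = timedelta(weeks=8)
MAX_DURATION = timedelta(days=182)  # assumption: "six months" approximated as 182 days

def check_reservation(instance_count, start, duration, today):
    """Return a list of problems; an empty list means the plan fits the quoted limits."""
    problems = []
    if not 1 <= instance_count <= MAX_INSTANCES:
        problems.append(f"instance count must be between 1 and {MAX_INSTANCES}")
    if start < today:
        problems.append("start date is in the past")
    elif start - today > MAX_LEAD_TIME:
        problems.append("start date is more than eight weeks ahead")
    if duration <= timedelta(0) or duration > MAX_DURATION:
        problems.append("duration must be positive and at most about six months")
    return problems

today = date(2025, 1, 1)
print(check_reservation(64, date(2025, 1, 15), timedelta(days=30), today))  # []
print(check_reservation(65, date(2025, 4, 1), timedelta(days=30), today))
```

The second call reports two problems: too many instances, and a start date more than eight weeks out.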

For software, AWS offers Deep Learning AMIs, which are preconfigured with popular frameworks and tools, such as PyTorch and JAX. If you have used the AWS Neuron SDK for your applications, you can easily recompile them for Trn2 instances. The SDK integrates with essential libraries like Hugging Face, PyTorch Lightning, and NeMo, providing out-of-the-box optimizations for distributed training and inference.

To conclude, Trn2 instances and UltraServers are a significant step forward for ML infrastructure on AWS. They combine more compute power, better price performance, and room to scale, making them a valuable option for businesses looking to optimize their ML workloads. For more details, you can explore the official AWS page on Amazon EC2 Trn2 Instances.

For the latest updates, follow Jeff Barr on Twitter.
