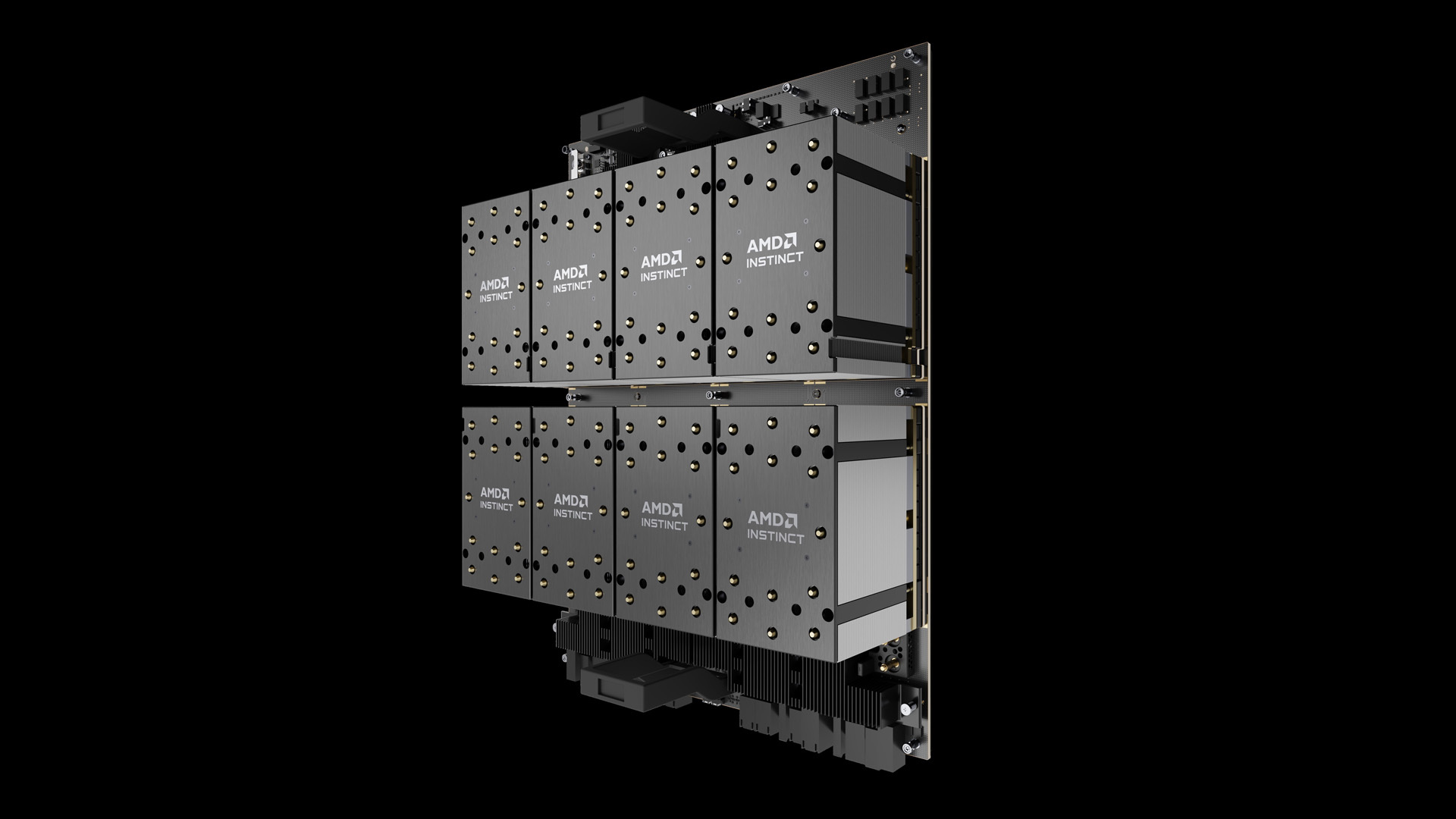

IBM Cloud to Integrate AMD Instinct MI300X Accelerators for Enhanced AI and HPC Performance

November 18, 2024 – Armonk, NY – IBM and AMD have announced a collaboration to deploy AMD Instinct MI300X accelerators as a service on IBM Cloud, with availability anticipated in the first half of 2025. The offering is intended to improve performance and energy efficiency for enterprises running generative AI models and high-performance computing (HPC) applications.

A New Era of AI Performance

By integrating AMD Instinct MI300X accelerators, IBM Cloud will add capacity for compute-intensive workloads. The accelerators are designed to support large AI models and datasets, providing the performance and scalability that enterprises running such models require.

Philip Guido, Executive Vice President and Chief Commercial Officer at AMD, emphasized the importance of this collaboration. He stated, "As enterprises continue adopting larger AI models and datasets, it is critical that the accelerators within the system can process compute-intensive workloads with high performance and flexibility to scale. AMD Instinct accelerators combined with AMD ROCm software offer wide support including IBM watsonx AI, Red Hat Enterprise Linux AI, and Red Hat OpenShift AI platforms to build leading frameworks using these powerful open ecosystem tools. Our collaboration with IBM Cloud will aim to allow customers to execute and scale Gen AI inferencing without hindering cost, performance, or efficiency."

Shared Vision for AI Empowerment

IBM and AMD share a common vision to make AI more accessible and advantageous for enterprises. Alan Peacock, General Manager of IBM Cloud, described the companies’ commitment to this mission. "AMD and IBM Cloud share the same vision around bringing AI to enterprises. We’re committed to bringing the power of AI to enterprise clients, helping them prioritize their outcomes and ensuring they have the power of choice when it comes to their AI deployments," he said. With AMD’s accelerators available on IBM Cloud, enterprise clients will have an additional option for scaling their AI deployments while optimizing both cost and performance.

Key Features and Benefits

The MI300X accelerators will be delivered as a service on IBM Cloud to enterprise clients across industries, including heavily regulated ones. The offering will rely on IBM Cloud’s security and compliance capabilities to support data protection and regulatory adherence.

Support for Large Model Inferencing

One of the primary advantages of the AMD Instinct MI300X accelerators is their support for large model inferencing. With 192 GB of high-bandwidth memory (HBM3) per accelerator, enterprises can run larger models with fewer GPUs. Needing fewer GPUs per model can reduce the cost of AI inferencing.
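The link between memory capacity and GPU count can be illustrated with a rough, back-of-the-envelope estimate. The sketch below is not from the announcement: the function name `min_accelerators`, the 20% overhead allowance for activations and cache, the 80 GB comparison device, and the example model sizes are all assumptions chosen for illustration. Only the 192 GB figure comes from the text above. It counts how many accelerators are needed just to hold a model's weights in memory and ignores throughput, interconnect, and parallelism strategy.

```python
import math


def min_accelerators(params_billions, bytes_per_param, memory_gb,
                     overhead_fraction=0.2):
    """Lower bound on accelerators needed to hold a model in memory.

    params_billions   -- model size in billions of parameters
    bytes_per_param   -- 2 for FP16/BF16 weights, 1 for 8-bit weights
    memory_gb         -- memory per accelerator in GB (10**9 bytes)
    overhead_fraction -- assumed extra memory for activations and cache
                         (hypothetical allowance, not a measured value)
    """
    # 1e9 parameters times bytes per parameter equals gigabytes directly.
    weights_gb = params_billions * bytes_per_param
    required_gb = weights_gb * (1 + overhead_fraction)
    return math.ceil(required_gb / memory_gb)


MI300X_GB = 192      # per-accelerator HBM3 capacity cited in the article
COMPARISON_GB = 80   # hypothetical smaller-memory device, for contrast only

for params in (70, 180):  # illustrative model sizes, in billions of parameters
    large = min_accelerators(params, 2, MI300X_GB)
    small = min_accelerators(params, 2, COMPARISON_GB)
    print(f"{params}B parameters at 16-bit: "
          f"{large} x 192 GB vs {small} x 80 GB")
```

Under these assumptions, a 70-billion-parameter model in 16-bit precision needs about 168 GB and fits on a single 192 GB accelerator, while the hypothetical 80 GB device would need three. Real deployments depend on batch size, context length, and quantization, so the numbers are a lower bound rather than a sizing recommendation.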

Enhanced Performance and Security

AMD Instinct MI300X accelerators will be offered as a service on IBM Cloud Virtual Servers for VPC, with container support through IBM Cloud Kubernetes Service and IBM Red Hat OpenShift on IBM Cloud. The combination is intended to optimize performance for enterprises running AI applications while keeping IBM Cloud’s security measures in place.

Expanding AI Infrastructure

For generative AI inferencing workloads, IBM plans to enable support for AMD Instinct MI300X accelerators within its watsonx AI and data platform. This integration will provide watsonx clients with additional AI infrastructure resources, allowing them to scale their AI workloads across hybrid cloud environments. In addition, Red Hat Enterprise Linux AI and Red Hat OpenShift AI platforms will be able to run Granite family large language models (LLMs) with alignment tooling using InstructLab on MI300X accelerators.

Looking Ahead

IBM Cloud’s integration of AMD Instinct MI300X accelerators is expected to be generally available in the first half of 2025. The companies expect the offering to improve performance and efficiency for enterprise AI and HPC workloads.

More information about IBM’s GPU and accelerator offerings is available on the IBM Cloud website.

Future Directions

While the collaboration between IBM and AMD represents a significant advancement in AI and HPC capabilities, it is important to note that statements regarding IBM’s future direction and intent are subject to change or withdrawal without notice. These statements represent goals and objectives only and should be considered in that context.

In summary, integrating AMD Instinct MI300X accelerators into IBM Cloud will give enterprises another option for running AI and HPC workloads, with a focus on performance, scalability, and cost-effectiveness. Further updates are expected from both IBM and AMD in the coming months.
