Transforming Health Diagnostics: Google’s AI-Powered HeAR Model Explores Acoustic Health Data

Our bodies produce sounds such as coughs, speech, and even breathing, and each carries a wealth of information about our health. These bioacoustic signals, often overlooked, have the potential to change how we screen for, diagnose, and manage numerous health conditions, including tuberculosis (TB) and chronic obstructive pulmonary disease (COPD). At Google, our research team recognizes the potential of these sounds as health indicators. With smartphone microphones now ubiquitous, we’ve been exploring ways to use artificial intelligence (AI) to extract meaningful health insights from this acoustic data.

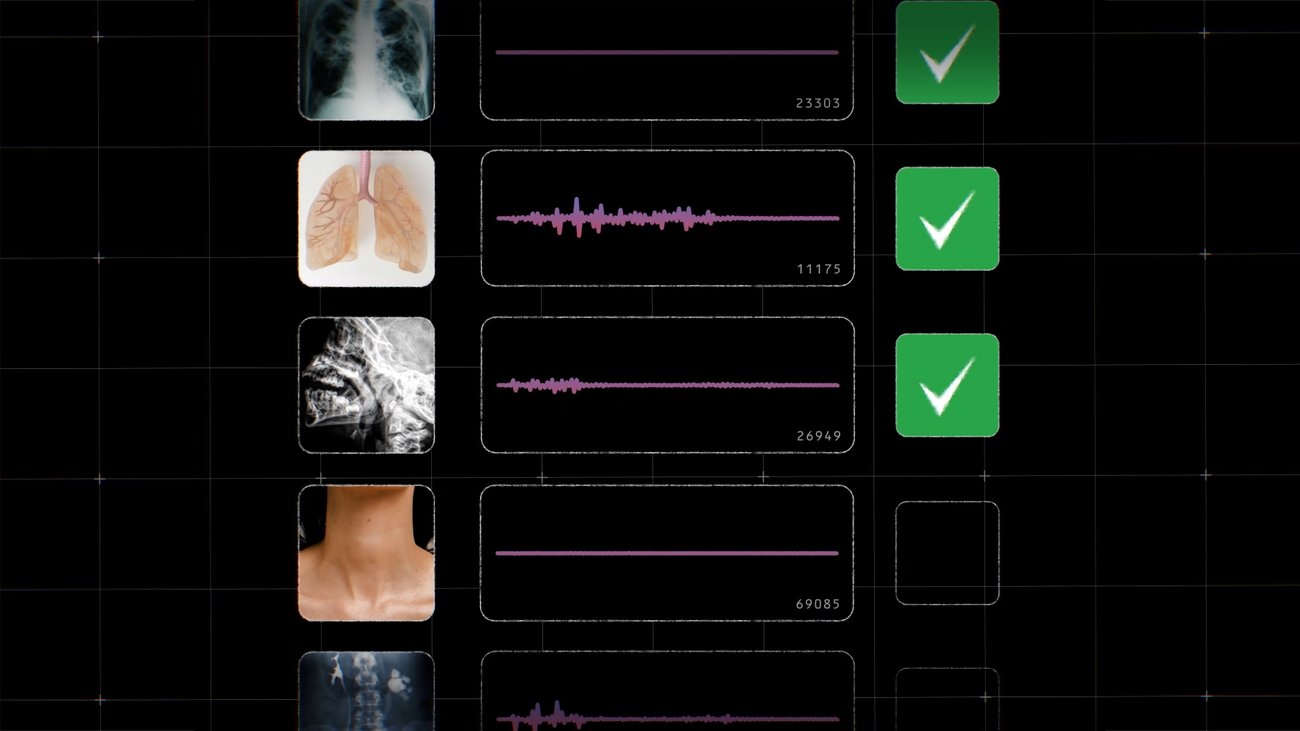

Earlier this year, we introduced Health Acoustic Representations (HeAR), a bioacoustic foundation model designed to help researchers build models that analyze human sounds and detect early signs of disease. The Google Research team trained HeAR on 300 million audio clips drawn from a diverse, de-identified dataset. The cough model in particular was trained on roughly 100 million cough sounds.

HeAR learns to identify patterns within health-related sounds, which gives it a robust foundation for medical audio analysis. Our findings indicate that HeAR outperforms other models across a wide range of tasks and generalizes better across different microphones, demonstrating its capability to capture meaningful patterns in acoustic health data. Additionally, models trained on top of HeAR reached high performance with less training data, which is critical in the often data-scarce field of healthcare research.
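To make the "less data" point concrete, here is a minimal sketch of the general pattern for building on a foundation model: keep the encoder frozen and train only a small linear classifier (a "linear probe") on its embeddings, using a handful of labeled clips. This is not the HeAR API. The `frozen_embed` function is a hypothetical stand-in (simple log band energies), the clips are synthetic noisy tones rather than real recordings, and the sample rate, clip length, embedding size, and tone frequencies are all illustrative assumptions.

```python
import numpy as np

SAMPLE_RATE = 16_000  # assumed sample rate for the synthetic clips
CLIP_LEN = 2_048      # samples per synthetic clip (illustrative)
EMBED_DIM = 64        # illustrative embedding size, not HeAR's real dimension


def make_clips(n, freq_hz, rng):
    """Synthetic stand-in for recordings: a tone at freq_hz buried in noise."""
    t = np.arange(CLIP_LEN) / SAMPLE_RATE
    phase = rng.uniform(0.0, 2.0 * np.pi, size=(n, 1))
    tone = np.sin(2.0 * np.pi * freq_hz * t + phase)
    return tone + 0.5 * rng.standard_normal((n, CLIP_LEN))


def frozen_embed(clips):
    """Hypothetical placeholder for a pretrained encoder (log band energies)."""
    power = np.abs(np.fft.rfft(clips, axis=1))[:, 1:] ** 2  # drop the DC bin
    bands = power.reshape(len(clips), EMBED_DIM, -1).sum(axis=2)
    return np.log(bands)


def train_linear_probe(x, y, lr=0.1, steps=300):
    """Logistic regression on frozen embeddings, fit by gradient descent."""
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(steps):
        logits = np.clip(x @ w + b, -30.0, 30.0)  # avoid overflow in exp
        p = 1.0 / (1.0 + np.exp(-logits))
        err = p - y
        w -= lr * (x.T @ err) / len(y)
        b -= lr * err.mean()
    return w, b


def run_demo(n_train=20, n_test=200, seed=0):
    """Train on a few labeled clips per class; return held-out accuracy."""
    rng = np.random.default_rng(seed)

    def dataset(n):
        # Two synthetic "classes" that differ only in tone frequency.
        clips = np.concatenate(
            [make_clips(n, 300.0, rng), make_clips(n, 1200.0, rng)]
        )
        labels = np.concatenate([np.zeros(n), np.ones(n)])
        return frozen_embed(clips), labels

    x_train, y_train = dataset(n_train)
    x_test, y_test = dataset(n_test)
    mean = x_train.mean(axis=0)  # center features using training data only
    w, b = train_linear_probe(x_train - mean, y_train)
    preds = ((x_test - mean) @ w + b) > 0
    return float((preds == y_test).mean())


if __name__ == "__main__":
    print(f"held-out accuracy: {run_demo():.3f}")
```

Only the probe's weights are learned here, so the number of trainable parameters stays small and a few dozen labeled examples can suffice when the embeddings already separate the classes. With a real pretrained encoder, `frozen_embed` would be replaced by a call to that model, and the rest of the structure would stay the same.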

HeAR is now accessible to researchers, aimed at accelerating the development of specialized bioacoustic models with minimal data, setup, and computational resources. Our objective is to facilitate further research into models tailored for specific conditions and populations, even when data is limited or cost and computational barriers exist.

One example of HeAR’s practical application comes from Salcit Technologies, an India-based respiratory healthcare company. It has built a product called Swaasa®, which uses AI to analyze cough sounds and assess lung health. Salcit Technologies is now exploring how HeAR can extend the capabilities of its bioacoustic AI models. To start, Swaasa® is using HeAR to help research and improve its early detection of TB based on cough sounds.

TB is a treatable disease, yet millions of cases go undiagnosed each year, often because people lack convenient access to healthcare services. Improving diagnosis is critical to the global effort to eradicate TB, and AI can help improve detection rates and make care more accessible and affordable worldwide. Swaasa® has a history of using machine learning to support early disease detection, bridging gaps in accessibility, affordability, and scalability by offering remote, equipment-free respiratory health assessments. With HeAR, Salcit aims to build on its existing research and expand TB screening more widely across India.

"Every missed case of tuberculosis is a tragedy; every late diagnosis, a heartbreak," said Sujay Kakarmath, a product manager at Google Research working on HeAR. "Acoustic biomarkers offer the potential to rewrite this narrative. I am deeply grateful for the role HeAR can play in this transformative journey."

The approach also has support from organizations such as The StopTB Partnership, a United Nations-hosted organization that brings together TB experts and affected communities with the goal of ending TB by 2030.

"Solutions like HeAR will enable AI-powered acoustic analysis to break new ground in tuberculosis screening and detection, offering a potentially low-impact, accessible tool to those who need it most," stated Zhi Zhen Qin, a digital health specialist with The StopTB Partnership.

HeAR signifies a substantial advancement in acoustic health research. We aim to foster the development of future diagnostic tools and monitoring solutions for TB, chest, lung, and other disease areas, ultimately improving health outcomes for communities globally through our research. Researchers interested in exploring HeAR can learn more and request access to the HeAR API.

Additional Insights and Reactions

The potential of HeAR extends beyond just diagnosing TB. With its ability to generalize across different microphones and perform well with less training data, HeAR can be applied to a broad range of health conditions. This opens up new avenues for researchers and healthcare providers to develop tools that can monitor and manage various diseases more effectively.

For instance, COPD, a chronic inflammatory lung disease, could benefit significantly from such technology. Early detection and continuous monitoring are vital for managing COPD, and HeAR’s ability to analyze cough sounds and other respiratory noises could lead to better patient outcomes.

Moreover, the integration of HeAR into smartphones and other widely accessible devices could democratize healthcare, particularly in remote or underserved areas where traditional diagnostic tools are scarce. This aligns with global health initiatives aiming to provide equitable healthcare access to all.

In terms of user privacy, it’s crucial to note that all data used to train HeAR was de-identified to ensure confidentiality. This practice underscores Google’s commitment to maintaining user trust while advancing healthcare technology.

Expert Opinions

Healthcare professionals and researchers have shown optimism regarding the potential applications of HeAR. Dr. Emily Jones, a pulmonologist, remarked, "The integration of AI in acoustic health analysis is a game-changer. Tools like HeAR can significantly enhance our ability to diagnose and monitor respiratory conditions, making healthcare more proactive and personalized."

Similarly, Dr. Michael Smith, a data scientist specializing in healthcare, noted, "The ability of HeAR to perform well with less data is particularly noteworthy. In many parts of the world, data scarcity is a significant barrier to developing effective health solutions. HeAR’s efficiency could overcome this challenge, paving the way for innovative health diagnostics."

Future Prospects

Looking ahead, the potential applications of HeAR are vast. Beyond respiratory health, HeAR could be adapted to analyze heart sounds, gastrointestinal noises, and even vocal biomarkers for mental health conditions. This versatility makes it a valuable tool in the broader context of health diagnostics and monitoring.

The collaboration between tech companies like Google and healthcare providers is essential for bringing such innovations to market. By working together, they can ensure that these advanced tools are not only effective but also accessible to those who need them the most.

Conclusion

The introduction of Health Acoustic Representations (HeAR) by Google marks a significant milestone in the realm of health diagnostics and monitoring. By leveraging AI to analyze bioacoustic data, HeAR opens up new possibilities for early disease detection and continuous health monitoring. Its potential to make healthcare more accessible, affordable, and effective is immense, particularly in regions with limited medical resources.

As HeAR becomes available to researchers worldwide, it holds the promise of driving forward the development of new diagnostic tools and improving health outcomes on a global scale. For those interested in exploring the capabilities of HeAR, more information and access to the HeAR API can be found on the Google Health GitHub repository.

This advancement epitomizes how technology can transform healthcare, making it more inclusive and efficient. With continued research and collaboration, the future of health diagnostics looks promising, heralding a new era where early detection and timely intervention become the norm, rather than the exception.
